Response to EC Consultation on Draft G7 Guiding Principles for Organizations Developing Advanced AI systems

The European Commission ran a short consultation on the draft G7 Hiroshima ‘Guiding Principles for Organizations Developing Advanced AI systems’, asking for comments on the 11 draft principles and on any additional principles. Connected by Data provided the following response.

The consultation asks whether the principles should apply to organisations developing, deploying or distributing advanced AI systems.

Missing principles

We proposed an additional principle:

Principle: Support the involvement of diverse affected communities in governance and oversight across the AI lifecycle

This includes adopting participatory & deliberative methods to ensure that feedback from users and affected communities about the impacts of an AI system is heard and responded to by appropriate teams, including at the highest levels of organisational decision making. Organisations should regularly review published evidence on public attitudes to AI, & should support additional research, as well as establishing their own formal, open & accountable structures for input.

Comments on draft principles

We provided the following comments on the draft principles. The text of each draft principle is quoted for reference.

Principle 1: Take appropriate measures throughout the development of advanced AI systems, including prior to and throughout their deployment and placement on the market, to identify, evaluate, mitigate risks across the AI lifecycle.

This includes employing diverse internal and independent external testing measures for advanced AI systems, through a mixture of methods such as red-teaming, and implementing appropriate mitigation to address identified risks and vulnerabilities. Testing and mitigation measures should for example, seek to ensure the robustness, safety and security of systems throughout their entire lifecycle so that they do not pose unreasonable risks. In support of such testing, developers should seek to enable traceability, in relation to datasets, processes, and decisions made during system development.

Principle 1 should specifically reference the need for methods that consult with and involve affected communities in the process of identifying risks and vulnerabilities, and designing mitigations. It should also reference use of established human rights impact assessment and equality impact assessment tools.

Principle 2: Identify and mitigate vulnerabilities, and, where appropriate, incidents and patterns of misuse, after deployment including placement on the market.

Organizations should use, as and when appropriate, AI systems as intended and monitor for vulnerabilities, incidents, emerging risks and misuse after deployment, and take appropriate action to address these. Organizations are encouraged to consider, for example, facilitating third-party and user discovery and reporting of issues and vulnerabilities after deployment. Organizations are further encouraged to maintain appropriate documentation of reported incidents and to mitigate the identified risks and vulnerabilities, in collaboration with other stakeholders. Reporting mechanisms to identify vulnerabilities, where appropriate, should be accessible to a diverse set of stakeholders.

Principle 2 should include a reference to mandatory public disclosure of post-deployment incident reports.

Principle 3: Publicly report advanced AI systems’ capabilities, limitations and domains of appropriate and inappropriate use, to support ensuring sufficient transparency.

This should include publishing transparency reports containing meaningful information for all new significant releases of advanced AI systems.

Organizations should make the information in the transparency reports sufficiently clear and understandable to enable deployers and users to interpret the system’s output and to enable users to use it appropriately, and that transparency reporting is supported and informed by robust internal documentation processes.

Principle 3 should be stronger, placing an obligation on AI system developers/distributors/deployers to ensure and verify that users have clearly understood the capabilities and limitations of the AI systems they are using. Actors should be able to provide clear evidence that users have adequate understanding before any liability for misuse transfers to users.

Principle 4: Work towards responsible information sharing and reporting of incidents among organizations developing advanced AI systems including with industry, governments, civil society, and academia.

This includes sharing information responsibly, as appropriate, including, but not limited to, information on security and safety risks, dangerous, intended or unintended capabilities, and attempts by AI actors to circumvent safeguards across the AI lifecycle.

Principle 4 should be a commitment to responsible information sharing, rather than a commitment to ‘work towards’ it.

Principle 5: Develop, implement and disclose AI governance and risk management policies, grounded in a risk-based approach – including privacy policies, and mitigation measures, in particular for organizations developing advanced AI systems.

This includes disclosing where appropriate privacy policies, including for personal data, user prompts and advanced AI system outputs. Organizations are expected to establish and disclose their AI governance policies and organizational mechanisms to implement these policies in accordance with a risk based approach. This should include accountability and governance processes to evaluate and mitigate risks throughout the AI lifecycle.

Principle 5 should note that a risk-based approach should include prioritising actions on risks that may generate or compound harms for particular minority groups, in addition to looking at societal level risks. It should include a commitment to transparent and consultative approaches to assessing risk and carrying out risk-based prioritisation, creating opportunities for affected communities to provide feedback on risk and harms.

Principle 7: Develop and deploy reliable content authentication and provenance mechanisms such as watermarking or other techniques to enable users to identify AI generated content

This includes content authentication such as watermarking and/or provenance mechanisms for content created with an organization’s advanced AI system. The watermark or provenance data should include an identifier of the service or model that created the content, but without including user information. Organizations should also endeavor to develop tools or APIs to allow users to determine if particular content was created with their advanced AI system.

Organizations are further encouraged to implement other mechanisms such as labeling or disclaimers to enable users, where possible and appropriate, to know when they are interacting with an AI system.

In Principle 7, rather than being qualified by the language of “where possible and appropriate”, labeling and disclaimers should be treated as standard and default, with any potential exceptions (e.g. interaction with AI systems in certain policing or national security contexts) set out explicitly.

Principle 9: Prioritize the development of advanced AI systems to address the world’s greatest challenges, notably but not limited to the climate crisis, global health and education

These efforts are undertaken in support of progress on the United Nations Sustainable Development Goals, and to encourage AI development for global benefit.

Organizations should prioritize responsible stewardship of trustworthy and human-centric AI and also support digital literacy initiatives.

Principle 9 should focus on making AI resources and skill-sets widely available as public good resources to researchers and institutions already focussed on priority issues of sustainable development. The current framing risks (a) allowing AI firms to capture and control supply-chains for addressing global challenges; (b) trying to solve problems with AI, rather than starting from a deep understanding of the problems and supporting sensitive, responsible and appropriate adoption of AI; (c) cultivating a narrative of ‘ethical offsets’, in which use of AI for public good in one setting is treated as justification for it creating disbenefit and harm in others.

Due to space limits on consultation responses, we did not provide comments on principle 6 (cybersecurity), principle 8 (research and investment to mitigate risks), principle 10 (advancing technical standards) or principle 11 (data input controls and audits).

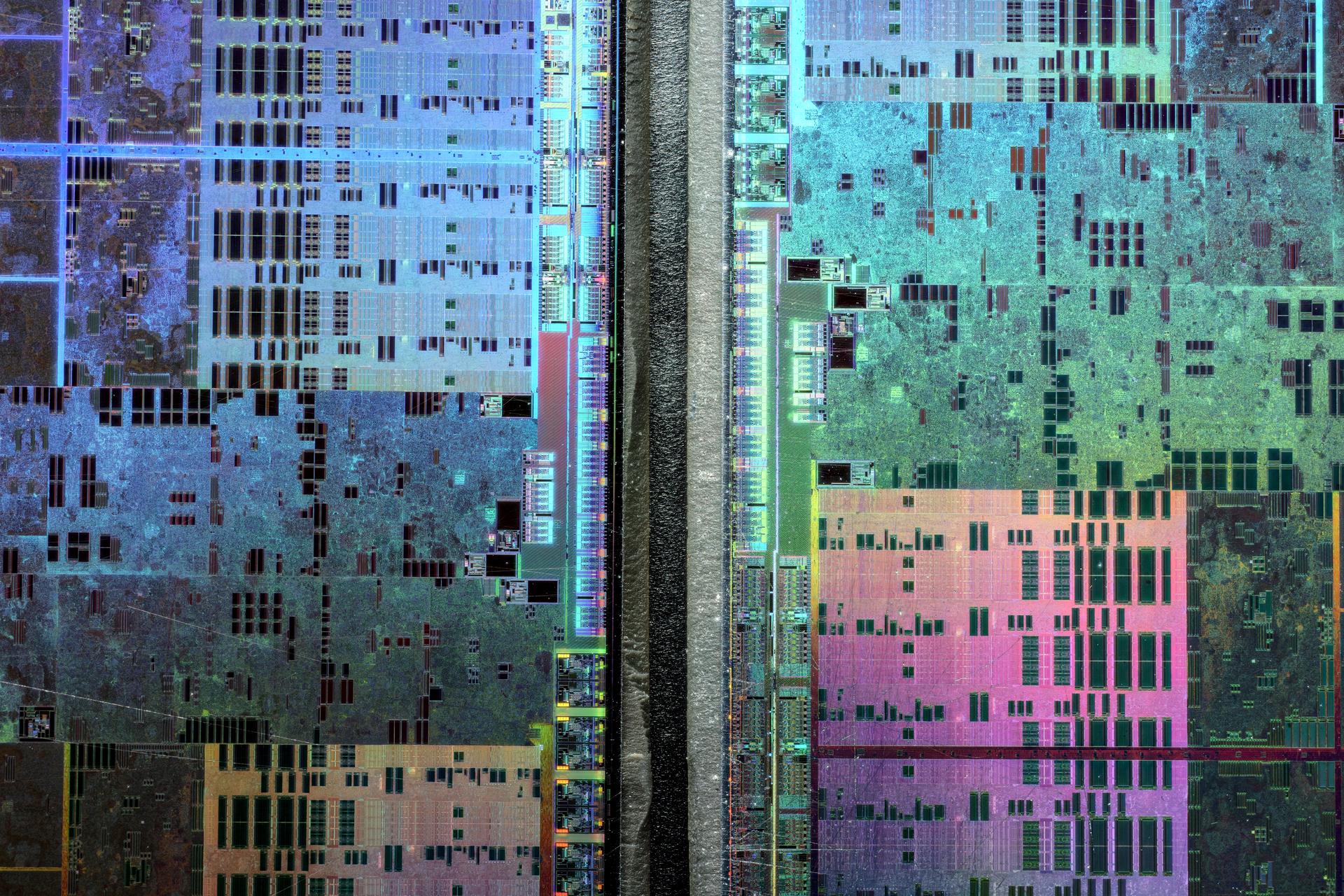

Post image credit: Fritzchens Fritz / Better Images of AI / GPU shot etched 5 / CC-BY 4.0