What do public attitudes to AI in education teach us about technology in public services?

The Department for Education has recently released public attitudes research on what parents and pupils think about AI in education, as part of its announcement of a £4m investment to create a dataset to support building AI tools. This is something of a hangover from the previous government (the work was carried out earlier in 2024), but it reflects the current government’s commitment to maximising adoption of AI across the public sector.

Before I dig into the details, I should first say that it’s fantastic to see public sector organisations carrying out public attitudes research to inform how they approach the adoption of AI. This kind of research can be used to prioritise investments, inform governance processes that address anticipated harms, identify barriers and blockers to adoption, and work out how to communicate about government plans.

Here I want to pull out some specific insights from the research that highlight considerations for how technology is rolled out in public services, namely profit sharing, schools as trusted decision makers, and equity and choice. Then I’m going to discuss some lessons for similar public engagement exercises in future, particularly about shifting understanding and acceptance of technology, consulting teachers and workers, and the overall “you said, we listened” approach we need.

Insights

This public attitudes research was jointly commissioned with the Responsible Technology Adoption Unit (RTA – CDEI as was), a unit of DSIT, and carried out by Thinks Insight and Strategy in late February / early March 2024 (possibly under the predecessor of this four-year £3m communications contract). It comprised two two-hour online workshops (with the same set of 36 parents) and three six-hour in-person workshops (each with 12 parents and 12 of their children) in Bristol, Birmingham and Newcastle, involving a total of 72 parents and 36 pupils.

There’s a lot in the report, and it’s worth reading from start to finish if you’re into this kind of thing. I’m just going to pull out a few things that caught my attention because of their wider implications for the adoption of AI across the public sector.

Profit sharing

Participants in these dialogues explored how to ensure that a portion of the profits made by private sector companies using public data (by which I mean here data about the public, collected in the course of the delivery of public services) could be reinvested into the education sector. The report says:

There was widespread consensus that, if profit were to be generated through the use of pupil’s work to enhance AI in education, schools would be the preferred beneficiaries, and resistance to the idea of tech companies being the sole profiteers.

Generally, parents and pupils acknowledged that pupils profiting individually from the use of their work and data would not be feasible, but almost all strongly believed that any profits derived from this data use should be distributed among schools to enable pupils to benefit. This belief was intensified by the understanding of intellectual property and pupils’ ownership of their work and data. Participants suggested a minimum share of the profits being handed back to schools, but views on how this should be done varied, with many feeling this should be done to maximise equality of access to AI (with profits being used to fund AI tools and resources for schools who are not able to do this themselves), while others felt profits should be equally shared. Few participants thought profits should correspond to each school’s level of data sharing and AI use, and participants were especially positive about profits being used to level the playing field for schools.

While participants did want schools to profit from AI use, some felt this could happen through profits being used by local authorities or regional bodies to improve education in the area, or by DfE to improve the education sector at a national level, rather than being distributed to individual schools. Most were comfortable with profits being shared between schools and DfE, however, the general assumption was that pupils would benefit most directly if profits were distributed to individual schools.

Participants accepted that tech companies would profit in some way from the use of pupil work and data, but the consensus was that they should not be the sole beneficiaries. Parents of children with SEND [special educational needs and disability] were particularly negative about AI tool development becoming a money-making exercise. Understanding of how exactly tech companies could profit was limited, with most assuming that they would make money by selling pupils’ data to third parties. There was a lack of awareness of other ways in which they might benefit from this data use such as by developing other AI tools for commercial use. On prompting, this form of benefit was generally seen as acceptable if used to develop educational tools for use outside the education sector, but unacceptable if used to develop tools for other purposes. This possibility was seen as misusing data for something other than its intended use, reflecting existing discomfort and concerns about data being sold by tech companies without participants’ knowledge or agreement.

This discomfort with profiteering seemed to stem, in part, from general distrust of tech companies:

Trust in tech companies was extremely limited and there was little to no support for them to be granted control over AI and pupil work and data use.

Profit was almost universally assumed to be the primary or sole motivation of tech companies, rather than the desire to improve education and pupil outcomes. Reflecting starting views of tech companies as non-transparent and assumptions that data is sold on to third parties, participants did not trust them to protect or use data responsibly. Parents and pupils assumed that given free rein and with no oversight, tech companies would choose to sell data on to other companies with little concern for pupil privacy or wellbeing.

This distrust of tech companies stemmed in part from an assumption that any data shared with them would be sold to others, with potentially detrimental consequences for pupils:

However, participants were deeply concerned about the security of this data, especially special category data, fearing that any breaches would result in comprehensive datasets about individual pupils’ demographics, abilities, and weaknesses being shared more widely and exploited. This was a particular worry for parents of children with SEND, for whom concerns centred around their children’s future opportunities. They were particularly concerned that their child’s SEND status could be shared between government departments which could impact the benefits their child might be entitled to, or about future employers accessing their child’s data via the companies developing AI, impacting their child’s future.

This echoes our findings when we interviewed people about the role of tech companies in the health sector, as well as wider studies of public attitudes to technology. It seems that distrust stems from common media narratives about the behaviour of the largest tech companies – particularly their use of personal data for targeted advertising – and that the suspicion spreads like a contagion to other, smaller firms.

The challenge of how to return the value created from public data to benefit the public is a tricky one. There are various ways of approaching it: charging for data access; public/private partnerships or equity stakes; targeted/hypothecated or general taxation; even restricting access to public data to the public sector or civic technologists. In her paper Rethinking the social contract between the state and business, Mariana Mazzucato recommends introducing conditionalities:

Conditionalities are one powerful tool that governments can use to co-shape investment and cocreate markets with the private sector. When companies benefit from public investments in the form of subsidies, guarantees, loans, bailouts or procurement contracts, conditions can be attached to help shape innovation and direct growth so that it achieves the greatest public benefit. For example, procurement can be made conditional on greener supply chains, reinvestment of profits and better working conditions.

Importantly, there are different types of conditionalities governments can leverage and this working paper addresses four of them: (1) conditionalities related to access, where equitable and affordable access to the resulting products and services is ensured; (2) conditionalities related to directionality, notably related to the green transition, where firms’ activities are directed towards climate-friendly goals, and they intentionally use green options and reduce negative environmental impacts, and to the improvement of labour conditions, where productive employment opportunities are created by firms, measured not just in quantity but also in quality, and diversity and equity are embraced; (3) profit-sharing conditionalities, where profitable firms share royalties or equity with government and may be incentivised to leverage their profits through acquisition of government shares; and (4) conditionalities related to reinvestment, where profits gained are reinvested into productive activities and R&D for longer term benefit, avoiding financialisation; (Laplane and Mazzucato 2020). In the context of the revival of industrial policy across nations, it is critical to reflect on how best to build accountability and alignment with key policy goals into government support.

This thinking usefully extends beyond financial exchanges. It makes me think of conditionalities such as requiring companies to improve and supplement the data, to provide access to the results of their analyses, or to enable researchers and civil society to compute over the data.

To my knowledge, there has been no serious attempt to explore how different approaches to ensuring we all benefit when companies access public data would affect either the innovation ecosystem or public trust. If plans for a national data library – particularly of a sort that supports AI innovation – are to come to fruition, this is an issue that will have to be faced head on.

Schools as trusted decision makers

Participants were asked who they trusted to make decisions about the use of pupil work and data in AI systems, and schools came out top:

Schools were most trusted to make decisions about the use of pupils’ work and data, as well as to hold data that was seen as more sensitive (such as SEND data or pupil identifiers). Where concerns about school involvement existed, they were centred around unequitable AI use and access.

Parents and pupils felt that schools could be relied on to make decisions in the best interest of pupils and to prioritise educational outcomes and safety over other considerations like AI development and profit. Central to this trust was the widely held perception that schools are not primarily profit-motivated and are already trusted to safeguard pupils, which led to the assumption that schools can be relied upon to continue doing this when it comes to AI. As a result, participants wanted schools to have the final say in how pupil work and data is used, with the ability to approve or reject uses suggested by the government or tech companies if they are felt to harm pupils or jeopardise their privacy and safety.

Schools were also trusted to hold pupil data, with many who were uncertain about special category data being shared and used feeling reassured about this data being collected if schools could control its use and guarantee that it would not be shared beyond the school.

In comparison, while the participants saw a role for the government in setting standards, they had concerns about big centralised (or linked) databases controlled by the state:

Parents also worried about how pupils’ performance and special category data (such as SEND status) could be used by government if held in a central database accessible beyond DfE. There were also concerns around how particular agendas might determine the content used to optimise AI and therefore how and what AI tools teach pupils.

This was a particular concern for parents of children with SEND, who worried that their children’s future could be affected if pseudonymised or personally identifiable data is held and accessed by government beyond their time at school. They required reassurance that data showing their children’s level of ability and any SEND would not be used in future, for example to affect their entitlement to government assistance.

Many parents also generally worried about increased surveillance if provided with data on children throughout their formative years, particularly if AI use becomes standard and most or all of the population’s data in this context is held and used by a limited number of central organisations.

There is a tension here between the government’s vision of integrated, personalised digital public services, supported by a Digital Learner ID and data sharing across the public sector, and the public’s fears of an overbearing, authoritarian, “Big Brother” state.

It seems unlikely that these fears arise because a large proportion of the public is involved in fraud they’re worried might be detected. In part, there is a communication problem: information about the sharing of data between departments often hits the media as scandals – see for example the stories about the Department for Education sharing data with the Home Office and DWP – rather than through proactive transparency. But some concerns are legitimate: there are consequences when inaccurate information ripples through government systems, when data is misinterpreted because it has been removed from its original context, or when governments change and start to use data in unacceptable ways that are most damaging to already minoritised, marginalised and vulnerable groups.

There are neat data-architectural and user-facing designs that could help mitigate these risks and build trust, but it’s not clear to me whether or how DSIT or GDS are considering them. Personally, I would be shouting about safeguards from the rooftops to avoid a GPDPR-style backlash.

There is also a tension between the expectation that local institutions – such as schools, hospitals, police forces or local authorities – can make well-founded, context-sensitive decisions about which apps they adopt and how public data gets shared, and the reality of those organisations’ capacity to grapple with ethical decision making, good governance and good procurement, and of their (lack of) power to influence the products that tech companies offer them.

In our design lab on EdTech, carried out in partnership with Defend Digital Me, we dug into the different layers of governance where decisions need to be made and who should be making them. These include bodies closer to the level of the individual school – such as the local authority, for the schools it runs; multi-academy trusts; or school improvement partnerships and clusters – as well as more centralised (but independent of government) institutions, such as Ofsted and BECTA (as was). These kinds of organisations have more capacity to at least offer guidance to individual schools, so that schools don’t have to do all the heavy lifting themselves.

(An interesting comparison here is with GPs, who take control over the sharing of patient data relatively seriously, but who also use data access as a bargaining chip in their contract negotiations with the NHS, thanks to the collective capacity and power provided by the BMA.)

So while I personally think more devolved decision making is the right model to adopt, local institutions need a lot more support to be able to take on a data stewardship or AI governance role effectively. Any tech adoption drive needs to fill these tech-adjacent capability gaps.

Equity and choice

The participants were pretty canny in identifying the potential risks of AI in education, including inequities arising from differential adoption of AI across the education system:

Parents and pupils were concerned that AI use would exacerbate existing inequalities in society.

Almost all participants felt that if AI could indeed support children’s learning and potentially give them a head start, there should be equal access to it for all schools. Within the current education system, they assumed that the best AI tools would only be accessible to the schools that could afford it. They felt this would exacerbate existing inequalities, add to the unfair advantage of those who are better off, and lead to further stratification – of the education system, but also of the labour market and society as a whole. Parents of pupils who attend schools that are struggling or in disadvantaged areas felt resigned to inequality getting worse, with AI tools just another resource their child could miss out on.

There was also some concern about variation in teachers’ abilities to use AI to its full potential, at least at first. Both parents and pupils worried that if training and support wasn’t provided to ensure all teachers meet a minimum level of proficiency with AI tools, some pupils would benefit less from AI use than others.

As a result, many felt that the introduction of AI tools in schools should be centrally coordinated and funded, with tools standardised and quality assured, and profits from selling pupils’ work and data reinvested into the school system.

At the same time, the participants also described a model where schools should be given control over whether or not to adopt AI technologies:

A few parents noted that schools may not all choose to use AI, or that there could be disparities within schools if it were left up to teachers’ discretion and some refused to integrate AI into their teaching. Some worried that schools with fewer resources would be left behind as other schools (such as private schools) adopted AI use to their advantage. There was also a minor question about the impact schools’ teaching philosophies might have on the decision to use AI or not, for example whether religious schools might choose not to use a standardised AI tool in order to have control over what exactly students learn.

However, there was little real concern about schools’ oversight of AI tools and pupil work or data, with most participants feeling the more control schools have, the better.

While the report doesn’t really distinguish between control over gathering pupil data for reuse and control over pupils’ use of AI tools, it does mention that participants thought parental permission should be sought before pupils used personalised AI tools, mostly because of the data sharing implications.

This is another tension that is hard to resolve. Leaving people and public bodies to make their own choices about whether and how to adopt technologies is likely to exacerbate existing inequalities (assuming those technologies are beneficial overall). On the other hand, removing freedom of choice is likely both to prompt resistance and to remove an essential driver of technological innovation and improvement.

I think this leaves the government with two possible approaches: constrain the top or boost the bottom.

If it wanted to constrain the top (which I doubt it does), it could set some basic constraints that ensure it isn’t possible for disadvantaged schools to make really bad choices, or fall too far behind. In education, that might include setting standards for edtech tools; drawing red lines around which kinds of tools schools are permitted to use; or even doing things like adjusting the curriculum and qualifications standards such that using AI is not as advantageous. This is essentially what the participants suggested:

Parents and pupils stressed that all schools should have access to the same, quality assured, AI tools. Many suggested this could be provided by certification processes sanctioned by schools and the government, with only AI tools that are officially tested and meet a minimum performance standard being approved for use in education. For many, this would alleviate concerns about some pupils or schools benefitting over others by accessing more developed AI tools than others.

The more politically acceptable approach – because it puts fewer constraints on innovation, which is seen as essential for economic growth – is likely to be to boost the bottom. That would entail targeting the development and adoption of AI at schools in the most disadvantaged areas, so that they gain the most from AI, rather than the least. In practice this might look like orienting investments such as innovation prizes and hackathons towards supporting those lower-capacity contexts; requiring higher-capacity schools to share resources with lower-capacity ones; building staff capacity through targeted training courses; and so on.

Now I don’t know much about education, but I imagine that if you’re in a struggling school, you’re more likely to be worrying about your ceilings falling in, or teacher recruitment, or supporting pupils with mental health problems and special educational needs than adopting AI. But perhaps there are ways for AI to help with those – who knows.

Lessons

On to some thoughts about the public attitudes research itself. The report already offers some methodological reflections, for example about how to retain engagement when conducting this kind of research with children, and about the value of spreading sessions out over time. I want to reflect on a few others.

Shifting understanding and acceptance

There are a few nuggets within the research that suggest something we also observed in the People’s Panel on AI: given that most people start from relatively limited practical experience of tools they would identify as AI tools, introducing them to and informing them about current AI uses both increases their understanding of the technology (upsides and downsides) and, at least initially, increases their interest in and positive attitude towards it.

Although there was confusion about how exactly AI tools would learn from pupils’ work, parents and pupils still felt pupil work was fine to share. By the end of the engagement, both parents and pupils understood that providing a wide range and quality of work would improve AI outcomes in the long run. As a result, they accepted data sharing as a necessity.

But the way some of the report reads makes me wonder how this acceptance was achieved. It might be down to the structure of the report, or of the sessions, but parts of it sound as though parents and pupils raised concerns that the facilitators then challenged so that they would change their minds.

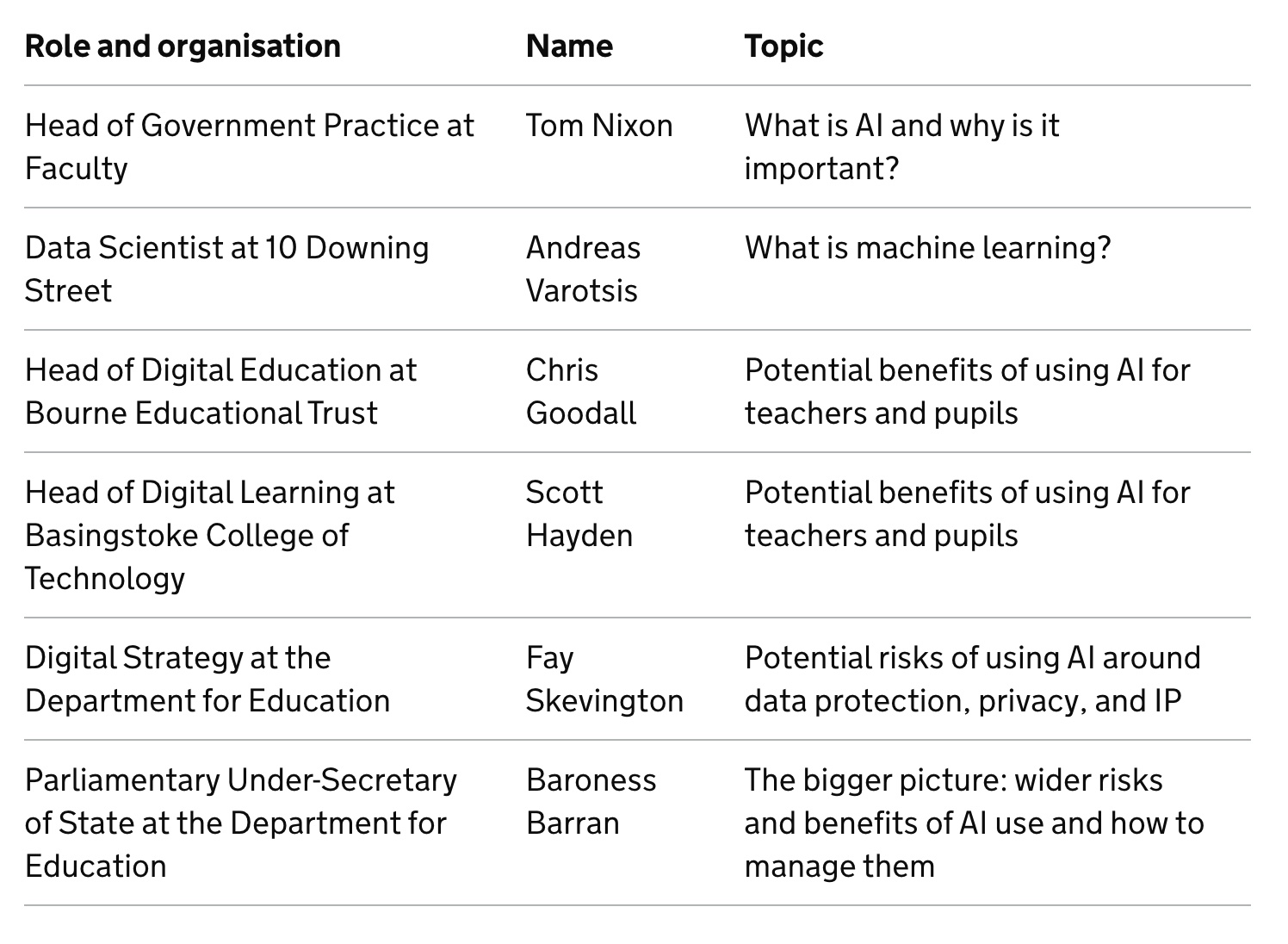

With these kinds of dialogues, I always check exactly who the participants heard from, and what they heard. In this case, the appendix lists some videos they were shown; they didn’t have a chance to question or discuss things with these witnesses.

What I’ll observe here is that there were several kinds of people with expertise and opinions on the issues under discussion whom the participants didn’t hear from. These include ordinary teachers (as opposed to those specialising in the use of digital technologies), union representatives, for example from the NEU or NASUWT, and civil society groups concerned about children’s data and digital rights, such as Defend Digital Me or Five Rights.

Taking a step back, engagement exercises like this generally fall into three types. These often get blurred together, or research of one type is reused (or misused, or misrepresented) as if it were another type. The three types are:

- User research about the lived experience of members of the public in their interactions with a system, for example to identify the most important challenges or barriers they encounter, so that technology can be targeted to address those.

- Market research about public attitudes towards a particular policy or proposal, including testing messaging, so that future engagement and communications with the public can be more effective in creating acceptance.

- Public participation exercises where participants engage in deliberation with each other to make judgements about areas where there are ethical or moral questions, which then inform (or in very rare cases determine) political decision making.

This piece of research is market research about public attitudes. This comes through most clearly in the summary of the dialogue (the only part most people will read), which is framed around the public concerns that policymakers pursuing a policy of increasing the use of AI in education will have to address:

- Parents and pupils frequently share personal information online, often without considering the implications. The benefits and convenience of using online services, especially those that provide a tailored experience, tend to outweigh any privacy concerns.

- While awareness of AI among both parents and pupils was high, understanding did not run deep. AI is often associated with robots or machines, and fictional dystopian futures. Only some – those with more knowledge of or exposure to AI – thought of specific applications such as LLM-powered tools.

- As a result, views on the use of AI in education were initially sceptical – but there was openness to learning more. Initial concerns about AI in education were often based on a lack of understanding or imagination of how it could be used.

- Parents and pupils agreed that there are clear opportunities for teachers to use AI to support them in their jobs. They were largely comfortable with AI being used by teachers, though more hesitant about pupils interacting with it directly.

- By the end of the sessions, participants understood that pupil work and data is needed to optimise AI tools. They were more comfortable with this when data is anonymised or pseudonymised, and when there are clear rules for data sharing both with private companies and across government.

- The main concerns regarding AI use centred on overreliance – both by teachers and pupils. Participants were worried about the loss of key social and technical skills and reduced human contact-time leading to unintended adverse outcomes.

- The research showed that opinions on AI tools are not yet fixed. Parents’ and pupils’ views of and trust in AI tools fluctuated throughout the sessions, as they reacted to new information and diverging opinions. This suggests that it will be important to build trust and continue engagement with different audiences as the technology becomes more established.

There’s nothing at all wrong with doing this kind of research; indeed it’s vital to understand how people feel about proposed policies, and to use that to mitigate risks and hone communications.

But the research should be characterised accurately. In this case, the way it is referenced – in a press release about a £4m investment to create a dataset that edtech developers can use to build tools for teachers – presents it as though a desire for teachers to use generative AI arose spontaneously from participants, or resulted from their careful consideration of all the evidence for and against different approaches (my emphasis):

It comes as new research shows parents want teachers to use generative AI to enable them to have more time helping children in the classroom with face-to-face teaching – supporting the government’s mission to break down barriers to opportunity. However, teachers and AI developers are clear better data is needed to make these technologies work properly, which this project looks to help with.

To be clear: the research doesn’t show that parents want teachers to use generative AI. What it shows is that when parents and pupils are asked to consider which uses of AI would be acceptable, the uses they are most comfortable with are those that help and support teachers (I’ll dig into this a bit more below), as opposed to pupils having personalised AI tutors.

The biggest perceived opportunity for AI use in education was to support teachers in generating classroom materials and managing feedback in more efficient ways. The perception was that this could reduce administration tasks and increase the attractiveness of teaching as a profession.

Across the workshops, parents and pupils felt most comfortable with teachers using AI as a tool to support lesson delivery (for example, by helping to plan lessons). They were less comfortable with the idea of pupils engaging directly with AI tools, as they wanted to ensure some level of human oversight and pupil-teacher interaction.

The parents and pupils weren’t asked what changes to education provision would make the most difference to their lives. They weren’t asked how to prioritise education funding or to picture their ideal future of education. They were asked “In which circumstances, if any, are parents and pupils comfortable with AI tools being used in education?” and “In which circumstances, if any, are parents and pupils comfortable with pupils’ work being used to optimise the performance of AI tools?”

Separately from how the research is characterised, I think there’s something interesting to consider here about how to carry out deliberative dialogues in a context where most people come in with a baseline level of fear and distrust, some of which is founded on misunderstandings about how the technologies under consideration would work. (I wish there’d been someone there to challenge misconceptions about the utility of pseudonymisation, for example!) Deliberations that answer people’s questions and address their concerns, or even challenge their misconceptions, can be useful mechanisms for improving understanding and increasing interest in and confidence about technologies. But for third parties to accept the legitimacy of the outcomes of such deliberations, they have to be confident that evidence and opinion were presented to participants in a balanced way. I can’t be sure that was the case here.

Consulting teachers and workers

Perhaps the biggest gap in this research is that its main conclusion – and the way it has been presented – is about how teachers could use AI, yet the research didn’t actually ask teachers themselves. Nor did it bring together any evidence about the effect of the suggested tools on either teacher workload or learning outcomes (such evidence being hard to gather before the tools exist). Consulting parents and pupils is a useful check that proposed uses will also be acceptable to them, but they’re not really in a position to tell whether a given use will actually be helpful and beneficial to teachers.

However, this research is part of a larger initiative within the Department for Education to explore uses of generative AI. This includes a call for evidence that took place last year, a series of hackathons run by Faculty, and some research exploring the technical viability of a tool to give pupils feedback on their work. (The R&D work by Faculty cost £350k, by the way.)

Faculty’s user research identified the top five areas where teachers thought tools might help them save time as:

- Marking

- Data entry and analysis

- Lesson planning

- Differentiating [creating different materials for different learners]

- Writing reports

and the top five areas where teachers thought tools might help them improve their teaching practice as:

- Differentiating learning materials and approaches to meet different students’ needs

- Designing lesson materials

- Framing (or reframing) concepts

- Lesson planning

- Making sense of data

(Just to say, this illustrates my point that to understand what’s useful for teachers, you have to talk to teachers: parents and pupils didn’t mention data entry and analysis as an area where tools might help teachers, probably because that part of teachers’ work is invisible to them. It also shows how more ‘magical’ tools win out over more straightforward ones. One would have thought that tools to simplify data entry and analysis would be relatively simple to build, certainly compared to tools for tasks like marking or lesson planning, so I’m a bit bemused that these aren’t the kinds of tools DfE is focusing on. You could even use generative AI to build them if you needed that fairy dust. But I guess they don’t seem as sexy, and there may be a fear that highlighting how much data teachers already record about pupils would cause public concern!)

Faculty found mixed perspectives from teachers on the acceptability of using AI for some of these tasks:

Some teachers raised concerns that using an AI tool for feedback would change the role of the teacher and this would affect the learning process in a significant way. Teachers also raised the importance of close human interaction to students’ personal development as well as their learning. This concern was not limited to giving feedback but was echoed by teachers and school leaders in discussions of other use cases, including lesson planning and writing student reports.

Teachers also raised concerns about whether using AI to support or replace elements of their role is the right thing to do in terms of best educational practice, as well as whether it is morally right. They were also uncertain about what other teachers, and parents or students would think if they were found to be using AI, as well as authorities such as their school leaders or Ofsted.

It’s not inconsistent to see particular tasks as both time-consuming and important parts of teaching practice, vital for building a good relationship between teacher and student. Thinking otherwise means you’re falling for the AI productivity myth: that “anything we spend time on is up for automation”, and that “the importance and value of thinking about our work and why we do it is … a distraction”. Tools that really support teachers will be ones that retain those important, satisfying, relationship-building parts of the job.

Working closely with teachers is key to ensuring tools are actually beneficial to them. Faculty went on to develop a proof of concept for a tool that provides feedback to students on their work. In their technical report on this work, the first finding is about the importance of engaging teachers throughout the process:

Crucial to the successes of the tool was the regular involvement of educators from the start of the process. Adopting an iterative approach to improving the tool through frequent feedback from a variety of user groups meant that tool could better meet the needs of teachers and students. This collaborative approach enables the AI’s capabilities to be enriched with expert insights, while also building trust with users. This was also important in the evaluation of the tool, with educators inputting into the development of the evaluation criteria, ensuring the assessment was reflective of educational standards and expectations.

It’s also interesting to note that the feedback from teachers on Faculty’s proof-of-concept tool was diverse. As I’ve written about recently, I believe this diversity is something to be explored and honoured rather than dismissed. Giving teachers options about how to use a tool will help them adopt it in ways that suit their situation and style.

Equally, we tend to choose things with short-term proximal benefits over long-term systemic benefits. I’d like to see evaluations of these kinds of new tools that don’t only focus on whether they seem useful to the target users, but also ask groups to imagine what it would look like if there were widespread adoption, or adoption only in privileged pockets. Consequence scanning, in other words. (Later, of course, it’s important to do proper evaluations against a range of criteria, but if we want to move fast without breaking things, spending a bit of upfront time thinking through what might happen is really worthwhile.)

Anyway, I hope that a good part of the £4m data store work will involve working closely with teachers, both to make sure that it’s used to build tools that support their actual, rather than imagined, needs, and to steer innovation towards tools that aren’t damaging in the long term.

You said, we listened

Overall, I’m left with a frustrated feeling about the gap between public attitudes and concerns around AI and the government’s gung-ho approach to its adoption across public services. Because for all the substantial work that goes into understanding public opinion on AI, it doesn’t seem to cross the gulf into politicians’ consciousness, at least not to the extent that it is taken seriously.

I can see scenarios where people are simply resigned to having to adopt technologies that don’t really work for them (see how some people feel about the NHS App): rather than digital public services restoring trust in the state, in the way Peter Kyle argues they will, they could easily contribute to a growing distrust of and frustration with government. Scenarios where some might opt not to use public services, or share data, because they’re concerned about how it might be used. Scenarios where technology will be judged as a success because that’s the average experience, but these averages hide growing societal inequalities.

All of these scenarios are regressive in ways illustrated by the concerns of parents of SEND pupils and those in struggling schools in the public attitudes research. It is far more likely that tools are designed around people with standard needs than around those who need additional support, which means digital public services and AI tools are less likely to work for the latter. It is also far more likely for people who are already minoritised, marginalised and vulnerable to be at risk of (and be concerned about) being on the sharp end of (automated) decision-making by the state, which makes the stakes around data sharing that much higher for them.

I do believe that it’s possible for new technologies to be progressive, to make public sector work more fulfilling, to reduce costs, and to benefit us all. I’m heartened that Peter Kyle’s vision for technology in public services recognises that the government can and should shape technology, and that the government has to bring the public with it on this journey. But I do think that for this to work, the government has to get a lot better at actually responding not only to public fears and misconceptions, but also to the future the public wants. Doing more deliberation and co-design – crucial parts of working in service of the public and in partnership with civil society – is a vital step towards making this a reality.