Informed engagement: explaining AI in local government

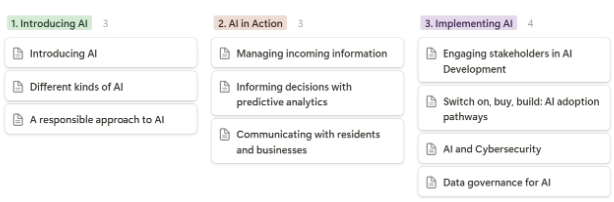

Late last year the team at Brickwall (who produced Connected by Data’s intro video) got in touch with us to ask if we might collaborate on a commission from the Local Government Association (LGA) to put together scripts for a series of videos intended to explain artificial intelligence. Drawing on desk research, interviews and a session at UK Gov Camp, we put together a proposed approach based on three sections: Introducing AI, AI in Action, and Implementing AI.

Ultimately, and in order to fit with the existing Cyber Unpacked video series, the LGA team decided to take an approach based on each video trying to define specific AI terminology (e.g. ‘What is Generative AI?’, ‘What is an algorithm?’) that makes only limited use of our research. However, in line with our commitment to work in the open, I’ve written up some of the research, the rationale behind this earlier proposal, and the (partial and incomplete) resources generated.

You can find all of that in this Google Doc (PDF version here), and I’ve picked out some of the key elements below.

What do local government stakeholders need to know about AI?

It’s hard to think of a member of local government staff who may not need some awareness and information about artificial intelligence, although why different staff want to know about AI, and the details of what they might need to know, varies significantly.

In Annexe 1 of the write-up you can find a list of user stories developed out of a GovCamp workshop and a set of interviews. Although far from an exhaustive list, it illustrates the wide range of information and learning needs around AI in local government, including:

- Discovering and being able to use off-the-shelf AI tools, such as through better prompt writing;

- Understanding or setting organization policy on AI use;

- Assessing the capabilities of particular tools, and knowing how to manage their limitations;

- Identifying what counts as AI or not within the organization, and being able to describe AI use clearly to others;

- Knowing about the different risks that need to be managed, and the frameworks that can help to do this;

- Understanding how we might develop our own tools, or when existing tools will be ‘good enough’ to deploy;

- Exploring who to involve within a change process.

Meeting these needs involves offering both information and critical frameworks to make sense of the fast-developing landscape of data and AI.

Distinctions over definitions

There is no consensus definition of Artificial Intelligence. Many of the key concepts in AI are moving targets: there was a moment when it might have made sense to explain Generative AI primarily in terms of text-related capabilities, an explanation quickly outdated as multi-modal models arrived. They are also hard to define precisely and concisely without making significant assumptions about the prior knowledge of the audience, or relying on a web of technical terms that each need their own definition.

This is why our proposed treatment for introducing AI is focused as much on what AI does, as what AI is, whilst paying attention to the distinctions between AI systems, and other forms of system that might be more familiar to local government practitioners, or otherwise simpler to explain.

For example, in our outline introduction to AI script, we draw on the distinction between an algorithm as an explicit set of rules for providing a service (such as a school admissions algorithm that might use distance from school, presence of siblings at school and other factors to decide who gets a school place) and machine learning applications in which a system has been trained to generate a set of outcomes (e.g. prioritization of case worker visits) based on a range of data points, but where the connection between inputs and outputs may not be easy to represent in terms of a set procedure to be followed.
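For readers who find code easier to follow than prose, the distinction might be sketched roughly as below. This is an illustration only, not something taken from our scripts: the admissions rule (siblings first, then nearest), the `PAST_CASES` figures, the feature names and all function names are invented for the example, and real admissions policies and case prioritization tools are considerably more involved.

```python
import math
from dataclasses import dataclass


@dataclass
class Applicant:
    name: str
    distance_km: float
    has_sibling: bool


def admissions_rank(applicants):
    """Explicit algorithm: the rule is written down and can be read directly.

    Hypothetical rule: applicants with a sibling at the school come first,
    then everyone is ordered by distance from the school.
    """
    return sorted(applicants, key=lambda a: (not a.has_sibling, a.distance_km))


# Invented training data: (missed appointments, months since last visit)
# paired with whether the case was, in hindsight, treated as a priority.
PAST_CASES = [
    ((0, 1), 0), ((1, 2), 0), ((0, 3), 0), ((1, 1), 0),
    ((4, 5), 1), ((3, 6), 1), ((5, 4), 1), ((4, 6), 1),
]


def train_priority_model(examples, epochs=1000, lr=0.1):
    """Machine learning: fit a tiny logistic regression by gradient descent.

    Nobody writes the rule; the weights are adjusted until the model's
    outputs match the past examples as closely as it can manage.
    """
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            score = bias + sum(w * x for w, x in zip(weights, features))
            predicted = 1.0 / (1.0 + math.exp(-score))
            error = predicted - label
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias


def predict_priority(model, features):
    """Return a score between 0 and 1; higher suggests higher priority."""
    weights, bias = model
    score = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-score))


applicants = [
    Applicant("Asha", 0.5, False),
    Applicant("Ben", 2.0, True),
    Applicant("Cara", 1.0, False),
]
ranked_names = [a.name for a in admissions_rank(applicants)]
print(ranked_names)  # ['Ben', 'Asha', 'Cara']

model = train_priority_model(PAST_CASES)
print(predict_priority(model, (5, 6)), predict_priority(model, (0, 1)))
```

The first function can be explained to a resident line by line. The second produces its answers from learned numbers (`weights` and `bias`) that depend entirely on the past cases it was shown, which is why questions about training data matter so much for this kind of system.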

We also found that it is important to draw out the consequential distinctions between different kinds of AI, namely:

- Narrow AI – systems trained and used for a particular purpose

- General Purpose AI – systems pre-trained with various capabilities (e.g. language models) that can be applied to a wide range of tasks

Noting that many people have been exposed to media narratives around existential threats from various forms of frontier AI, or film-fictions about self-aware AI, we also found it was important to distinguish currently existing AI from the as-yet-unrealized and contested concept of Artificial General Intelligence.

Lastly, picking up on one of the user stories surfaced in interviews about a desire to ‘avoid snake oil’, we identified a need to talk about AI as a marketing term that may or may not actually refer to the integral use of machine learning or other AI techniques within a product or service.

In our proposed ‘Introducing AI’ section we also included a suggested script on ‘A responsible approach to AI’. Responsible AI is a big topic: taking in ethical, environmental, legal, security and social concerns. However, there are a number of comprehensive resources on Responsible AI in general, and elements of it in particular. Responsible AI has also become an established discourse in the UK public sector. Here, two distinctions are important to establish: (1) AI off the shelf is not necessarily responsible AI; (2) responsibility is a property both of products (how systems are built), and of processes (how they are adopted, used, monitored and managed).

AI in Action: use cases and examples

When it comes to understanding the potential uses of AI in local government, and the issues that different uses give rise to, we sought to adopt a somewhat cybernetic conception of a council or public body (inspired by Hood and Margetts’ work on Tools of Government in the Digital Age), and to think about the kinds of general actions involved in local public service delivery:

- Managing incoming information;

- Delivering services and making decisions; and

- Communicating with residents, businesses and other stakeholders.

Using this framework, we can bring into video a wide range of examples of specific kinds of AI systems that might be used, and draw out issues that arise in the context of particular use cases.

*Key: ✅ = we could easily find a live example of this; 🔘 = I’m not sure if this is actually happening in local authorities, and we would need to research more.*

A cybernetic taxonomy of local authority AI use

- Managing incoming information

  - Image recognition

    - Automatic numberplate recognition ✅

    - Analyzing satellite images to detect fly-tipping ✅

    - Facial recognition ✅

  - Audio transcription

    - Creating transcripts of meetings ✅

    - Improving ‘Interactive Voice Response (IVR)’ phone lines ✅

    - Interpretation (gathering input from speakers of other languages) ✅

  - Video analysis

    - Facial recognition ✅

    - Monitoring footfall for public safety 🔘

    - Automating recruitment or assessment interviews 🔘

  - Text analysis

    - Automatically summarizing or sorting incoming messages ✅

    - Language translation (on incoming information) ✅

- Delivering services and making decisions

  - Customer interactions (for chatbots, only include advanced chat-bots that make use of large-language models)

    - Using chatbots to provide information ✅

    - Using chatbots to deliver transactional services 🔘

  - Workload & case management

    - AI-driven prioritization of cases 🔘

    - AI-driven staff allocation 🔘

    - Performance monitoring of staff 🔘

  - Predictive Analytics

    - Planning: Applying a pre-existing model to predict service demand ✅

    - Planning: Developing our own models to predict service demand ✅

    - Operational: Using a model to identify and target activity to risks (Social Care or Children’s Services) ✅

    - Operational: Using a model to identify and target activity to risks (other services) 🔘

  - Automated decision making

    - Fraud detection ✅

    - Making decisions about service eligibility 🔘

- Communicating with residents, businesses and other stakeholders

  - Knowledge management & analysis

    - Summarizing documents, transcripts or reports ✅

    - Summarizing long email threads ✅

    - AI-driven search and retrieval of organizational information (e.g. via Co-pilot) ✅

    - Producing draft responses to queries in a CRM ✅

    - Summarizing content for elected members 🔘

  - Content creation

    - Creating social media content ✅

    - Creating website content ✅

    - Drafting letters and reports ✅

    - Creating images or videos ✅

    - Creating content in other languages ✅

In the outline scripts we started to sketch out examples that might illustrate both the potential, and the pitfalls, of using AI in these different contexts.

Pathways to adoption

The third cluster of scripts we proposed focuses on implementing AI, and seeks to draw out the different pathways and considerations involved in procuring AI solutions versus developing them locally. In this section we also responded to the cross-cutting issues of cybersecurity and data governance, addressing the key point that deployment of AI in government (at least narrow AI) rests significantly upon having good data foundations.

Finding the right guidance

We originally set out to identify further reading for each potential video, linking both to more in-depth explainers, and to official government resources that set out clear guidance or requirements for local government responses to AI.

In Annexe 3 of the full document you can find the list of resources we evaluated. For each one we made a rough judgment of the ‘shelf-life’ the resource might have, and the level it operates at.

We were struck by the relative maturity of the guidance, but also by the risk that much of it is overwhelming and may be demanding to operationalize effectively.

A question of questions

One of the really useful things in the existing Cyber Unpacked series of LGA videos is that most end with clear questions that could be useful from both an operational, and a scrutiny and governance point of view.

We had a go at drafting potential questions to close out each of our proposed scripts, thinking in particular about whether the information given in the minutes before could equip officers or elected members both to ask these questions and to engage with the answers.

The draft questions from the scripts are collected below:

- Introducing AI

  - Do we have a clear understanding of the problem or challenge that AI can help us solve?

  - What wider impacts will the adoption of AI have on staff, services and communities?

- Different kinds of AI

  - What data was this AI system trained on?

  - What data is fed into this system when it operates?

  - How can the outputs be controlled?

- A responsible approach to AI

  - Who is affected by particular uses of AI?

  - How are we implementing best practices of Responsible AI?

- AI for managing incoming information

  - Are we being transparent with affected stakeholders about our AI use?

  - How are we evaluating the costs and benefits in each use-case?

- Informing decisions with AI

  - What data was this system trained on? Are we confident in the quality of our data to drive good predictions?

  - How do predictive tools integrate into our wider decision making? Are we empowering team members to use the insights from predictive analytics to take, and be accountable for, better decisions?

- Communicating with residents, businesses and stakeholders

  - How do we maintain quality control and accountability for AI generated content?

  - Are we transparent about when we are using AI to create content or manage communication?

- AI and Cybersecurity

  - Do we have the required skills to understand and respond to cyber-security risks of the AI tools we are exploring?

  - How are we monitoring and reporting on any AI-related cyber-security issues that arise?

Fragmentary scripts

We wrote a series of script fragments, intended originally to inform expert script-writing by Brickwall. Because of the direction the client took the work, these were not fully developed, but are shared in the full document in their fragmentary/unfinished form as part of working in the open.

Where next?

This post acts as an archive of a path not taken, though at least some of this material may be reflected in part in the upcoming LGA videos. Should any of the content be useful, consider it available under a Creative Commons Attribution license (credit to: Connected by Data).

We are exploring at the moment whether we might, in future, have a capacity-building offer for local government. I’ve also been talking with Hawkwood Centre for Future Thinking (where I currently hold an informal fellowship) about developing a ‘Perspectives on AI’ training programme that may build on this research.

If you’ve got other ideas for where this could all go, do get in touch.

Annexe: Script fragments

We wrote a series of script fragments, intended originally to inform expert script-writing by Brickwall. Because of the direction the client took the work, these were not fully developed, but are shared here in their fragmentary/unfinished form as part of working in the open.

Introducing AI

AI in Action

- 4. Managing incoming information

- 5. Informing decisions with predictive analytics

- 6. Communicating with residents, business and stakeholders

Implementing AI

- 7. Engaging stakeholders in AI development (limited fragments)

- 8. Switch on, build, buy: paths to AI adoption (partially developed)

- 9. AI and cyber security

- 10. Data governance for AI (no script developed)