Many of us – in civil society, industry, academia and elsewhere – criticised the narrow, ‘frontier’ focus of the UK’s AI Safety Summit. So, as part of the AI and Society Forum – an unconference running alongside the AI Fringe on Tuesday 31 October – CONNECTED BY DATA ran a workshop inviting people to come up with their own, alternative agenda for Bletchley Park.

About the session

We began with our own prior assumptions about summits: namely, that the ‘event’ is only one part of a longer process. Important work happens beforehand to get people aligned, the ‘event’ itself launches an agreed statement and some other commitments, and the longer-term work that emerges from the summit matters just as much.

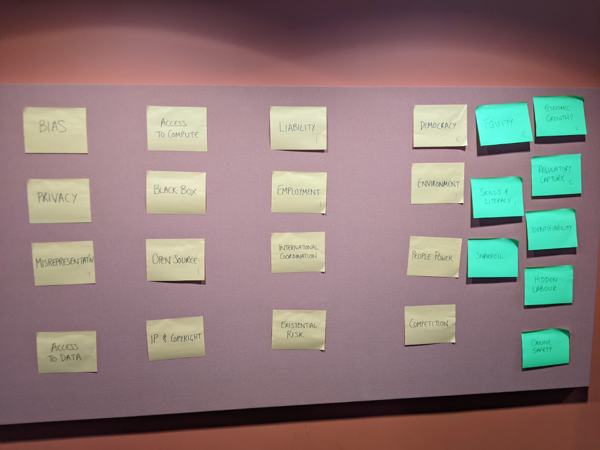

Given the short time available (just 45 minutes) we suggested 12 possible starting points for an alternative summit – the 12 ‘governance challenges’ proposed by the Science, Innovation and Technology Committee in their interim report on AI governance. These range from bias, privacy and misinformation, to infrastructural issues like access to data and compute, to the impact on work, to existential risks. We added a few of our own – on the environment, democracy, people power and competition.

The 12 AI governance challenges suggested by the Science, Innovation and Technology select committee - and some additions from our workshop

People at our workshop felt, on the whole, these challenges weren’t human enough – they were about things, not people. They suggested additional challenges: equity, economic growth, regulatory capture, skills and literacy, identifiability, snake oil, hidden labour, and online safety. We asked everyone to vote on one summit theme they’d like to develop. The five selected were all additions to the original select committee list: people power, equity, democracy, regulatory capture and economic growth.

We asked each group to think about:

- The headline they wanted from their alternative Summit

- The work that would need to go in beforehand to achieve that headline or objective

- The future work that should emerge from the Summit.

The alternative summits

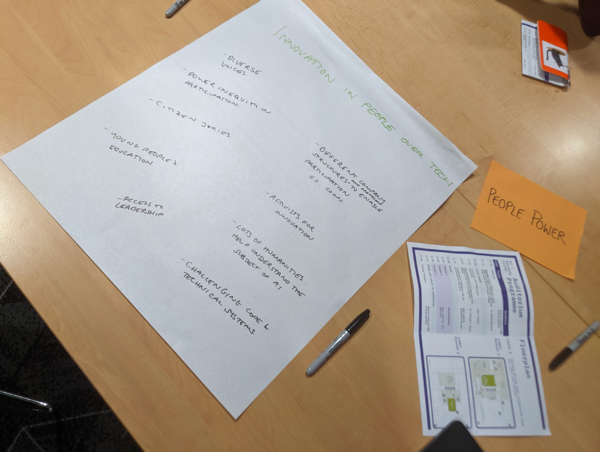

The people power summit wanted the headline of ‘innovation in people over tech’ – if we care about AI, we should care about people. They were concerned that there is a power inequity: activists, civil society, and other diverse voices have a lot to say but tend to be excluded. While the humanities can help us understand the subject of AI, only STEM subjects (Science, Technology, Engineering and Mathematics) were getting a look in. Citizens’ juries, access to leaders, young people and education, activists for innovation, challenging code and technical systems, and innovation around different company structures and protocols (e.g. cooperatives, rather than just shareholders having a say) were all discussed.

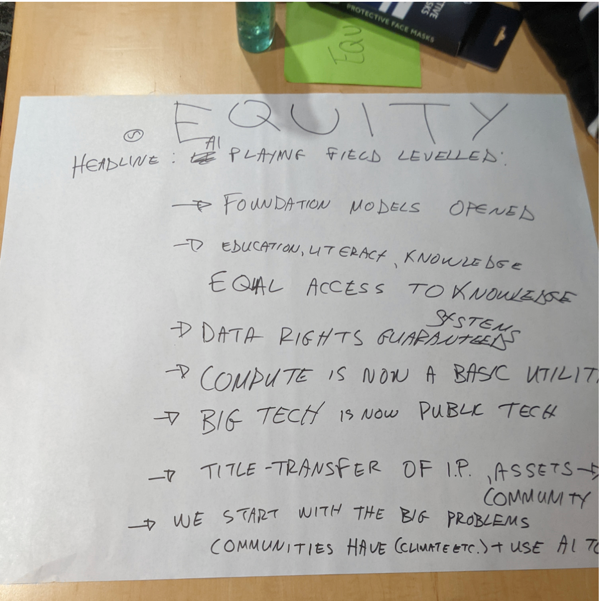

The equity summit noted that ‘Five Guys is a great burger chain, but not a great way to run the world’. The headline they wanted was ‘AI playing field levelled’, with foundation models opened up; equal access to education, literacy and knowledge systems; data rights guaranteed; compute becoming a basic utility; title-transfer of intellectual property and assets to communities; and big tech becoming public tech. We should start with the big problems communities face – such as climate – and, with input from those communities, use AI to fix those problems, rather than simply serving the five guys at the top.

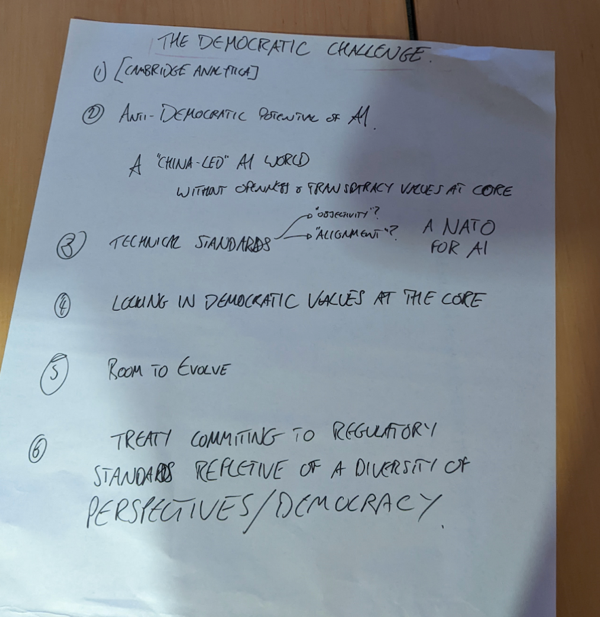

The democratic challenge summit made six key points. They started by citing the stories around Cambridge Analytica, and how people’s data may have been used. They noted the anti-democratic potential of AI, positing a China-led AI world without values of openness and transparency at the core, a ‘rabbit hole’ that would not align with western, liberal democratic standards. A discussion about technical standards (and ‘objectivity’ and ‘alignment’) prompted them to think about a (non-warfare-related) ‘NATO for AI’ reflecting a diversity of perspectives. The fourth point was the need to lock in democratic values at the core of the approach to AI – and the fifth, to give some room for the approach to evolve. Their final point was wanting a treaty committing to regulatory standards reflective of a diversity of perspectives and democracy.

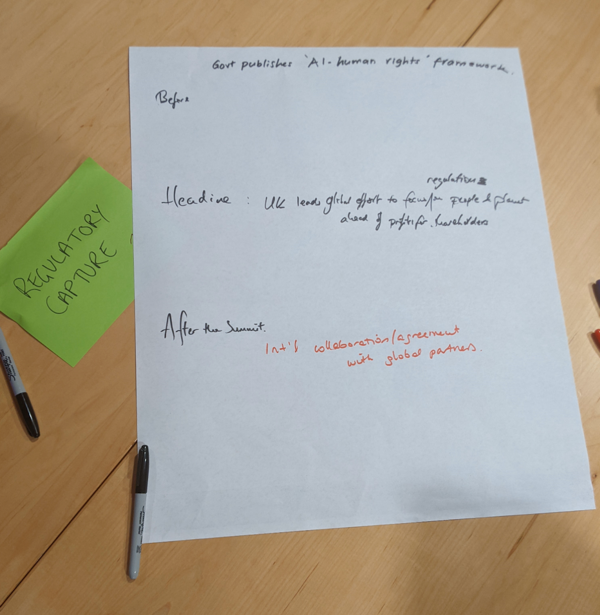

The regulatory capture summit (very much against big tech capturing the regulatory system, not in favour) wanted the headline: ‘UK leads global effort to focus regulation on people and planet ahead of profit for shareholders’. They wanted the government to publish an ‘AI human rights framework’, and for the summit to prompt international collaboration and agreement with global partners.

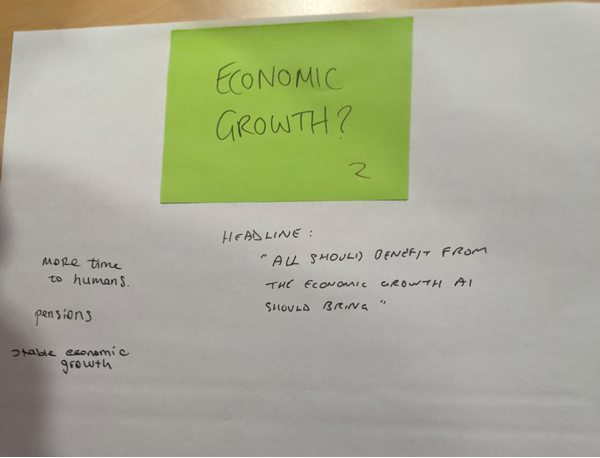

The desired headline from the economic growth summit was that ‘all should benefit from the economic growth AI should bring’. The summit organisers underlined the need for some stimulus for economic growth, which has been in short supply in recent years. Recent waves of technology had brought growth, if not equitable growth. Such growth should also be stable, and give more time back to humans – though we need to be cognisant of the risks brought by displacement.

Concluding thoughts

We asked everyone to summarise the discussion in five words, to provide some lessons for the actual Bletchley Park Summit:

- “I don’t feel represented there [Bletchley]”

- “I agree”

- “Harness power of activism”

- “Change is not utopia”

- “People first”

- “Resist AI”

- “AI by democratic governance”

- “Safety is the bare minimum”

- “Regulatory independence”

- “Regulation regulation regulation”

- “Quality standards for AI”

- “This isn’t unfixable yet”

- “Please make this work well”

- “Reality’s ugly and needs change”

- “AI discussions dangerously polarised”

Overall, one of the main themes throughout was centring the discussion about AI on people, not technology. As the AI and Society Forum tagline reads: let’s make AI work for eight billion people, not eight billionaires.