Connected Conversation: Impact Assessments and the Data Protection and Digital Information Bill

Described by the ICO as an “essential part of [a data controller’s] accountability obligations”, Data Protection Impact Assessments (DPIAs) are intended to support the identification and pre-emption of harms before data processing begins. They are also a key piece of evidence for effectively contesting processes or decisions by companies, the government and other data controllers.

As it does with other aspects of the Data Protection Act 2018 (implementing the GDPR), the Data Protection and Digital Information (DPDI) Bill rolls back the strength of DPIAs, replacing them with a narrower provision for the ‘Assessment of high risk processing’.

As the Bill heads for the House of Lords on the 19th of December, CONNECTED BY DATA convened a conversation focusing on data impact assessments.

Adam, CONNECTED BY DATA’s Campaigns and Policy Officer, kicked off the session with some scene setting, starting with the EU AI Act, which at the time was entering the last days of the trilogue between the EU’s Commission, Parliament and Council. One of the many hotly contested issues during the Act’s journey has been provisions for Fundamental Rights Impact Assessments (FRIAs). Positions on FRIAs have varied widely: the European Parliament set out a broadly scoped proposal, while the Spanish presidency of the EU Council sought to broker versions of FRIAs that would limit the obligation to public bodies, or fold FRIAs into other compliance measures within the Act rather than making them standalone processes.

A deal of sorts has been struck on the Act. At the time of writing (13.12.23), the text has yet to be published and further wrangling seems inevitable; the status of FRIAs, and how they interact with other parts of the law, remains uncertain.

Given the similar uncertainty and concern provoked by changes to the impact assessment provisions in the Data Protection and Digital Information Bill, colleagues from the Institute for the Future of Work (IFoW) and the Public Law Project (PLP) presented their analysis and work to attendees.

Algorithmic impact assessments for ‘good work’

Kester Brewin set the IFoW’s work on impact assessments in the context of its wide range of research and policy development on the current state and future of work. Emphasising that the provisions of the DPDI Bill need to be read together to understand their effect on work and workers, Kester explained that the IFoW is targeting a number of amendments. These include an amendment requiring a process for consultation where automation affects key areas such as access to work, termination of work, pay, terms and conditions (T&Cs), equality and health.

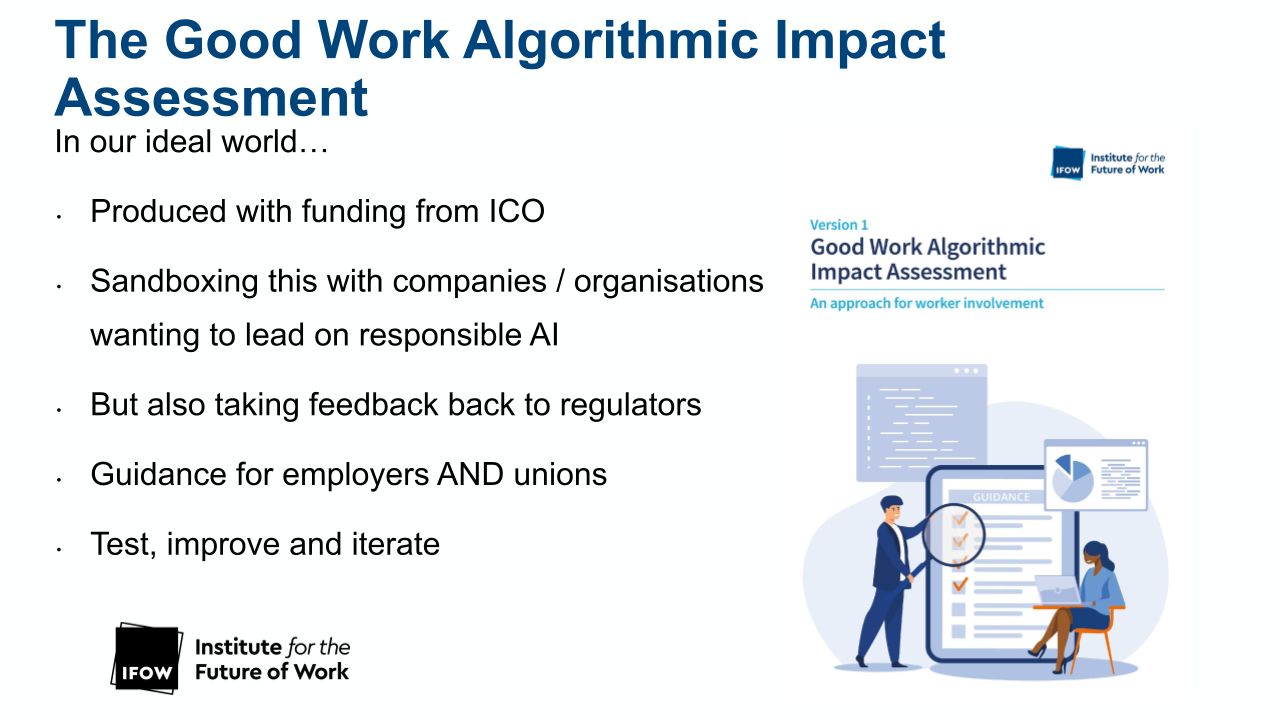

On impact assessments specifically, Kester discussed the opportunities to widen their scope beyond strict data protection conventions. The IFoW has been developing and testing Algorithmic Impact Assessments (AIAs), which provide a framework for comprehensive, pre-emptive evaluation of algorithmic systems and have regard to known automation archetypes such as augmentation and displacement.

Credit: IFoW


Kester stressed that AIAs should be proportionate to the risks and impacts of the systems concerned, and would need to incorporate the right to notification and information for affected workers. The IFoW is pushing for an amendment to the DPDI Bill establishing a duty to conduct an AIA, with the Secretary of State issuing regulations on what an AIA should entail for the various actors in the AI value chain. This would include duties to evaluate risks, impacts, and the mitigations and adjustments to be taken, to publish assessments in a timely way, and to review them on an ongoing basis. These latter requirements are absent from the DPDI Bill, and securing them is exactly what many civil society organisations are fighting for.

Why DPIAs increasingly matter in public services

Data Protection Impact Assessments (DPIAs) may not be the most high-profile matter of public concern in relation to the DPDI Bill, but Mia Leslie, a Research Fellow at the Public Law Project, underscored their significance and power, with a focus on the public sector and public services.

In particular, Mia emphasised that DPIAs aim to pre-empt violations of rights and discriminatory or harmful outcomes. If harm does occur, they can form the basis of legal challenges that help secure remedies for people and drive better practice, particularly as they show what was known, suspected or considered at the time, and whether anything was missed.

Mia cited the high-profile Bridges case (R (Bridges) v Chief Constable of South Wales Police). In 2020 the Court of Appeal held that, had South Wales Police conducted its DPIA correctly, it would have recognised that its proposed use of facial recognition technology interfered with the right to privacy under Article 8 of the European Convention on Human Rights, to which the Human Rights Act 1998 gives effect in UK law.

Government analysis found that the majority of responses to the consultation on ‘Data: A new direction’ (the government’s public consultation on reforms to the UK’s data protection regime) stated that DPIAs were helpful in “identifying and mitigating risk, and disagreed with the proposal to remove the requirement to undertake data protection impact assessments”. Despite this, the Government is seeking to limit the scope of impact assessments in the DPDI Bill, under Clause 20.

As currently drafted, the Bill lowers the minimum requirements on data controllers, who would only need to summarise the purposes of the processing, and drops the requirement to assess ‘necessity’ and ‘proportionality’. The PLP is highlighting that this is a backward step in the context of increasing datafication and the introduction of AI into public services, especially while other aspects of the rights-based GDPR regime are being undermined.

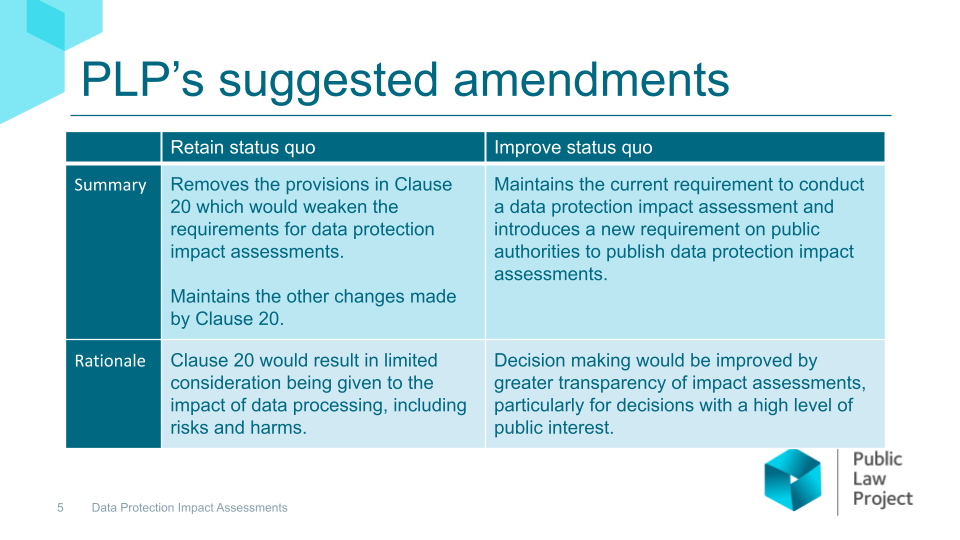

The PLP is proposing a number of amendments, including a simple retention of the status quo and a more extensive provision requiring public authorities to publish DPIAs, boosting meaningful transparency and contestability.

Credit: Public Law Project


Digging into impact assessments

The attendees then split into breakout sessions, facilitated by Mia of the PLP and Kester of the IFoW, to dig deeper into the issues.

In the breakout focusing on the public sector, discussion covered:

- how the weakening of the DPIA regime could affect the EU’s adequacy decision (possibly, but as part of the politics of the decision rather than the technicalities),

- whether there are examples of good practice in DPIAs (probably! But it is hard to know, given they are not routinely published and only surface in contentious situations, which strengthens the case for a publication regime),

- how the disruption of established practices and standards could lead to uncertainty, and could result in wider divergence in how different public sector bodies undertake impact assessments.

In the breakout focusing on the future of work, discussion touched on:

- the prospects for impact assessments to look more broadly, e.g., to cover equalities and to intervene at a system level, not just an individual level

- that work and education are areas where most people come face to face with algorithmic systems

- that a consent-based model is not appropriate, given the power dynamics involved and the lack of viable options to withhold consent

Next steps

Civil society organisations are looking to the House of Lords to influence the DPDI Bill, with the Lords’ Second Reading taking place on 19 December. The Bill will then move to the Lords’ Committee Stage in the week of 21 January 2024.

Briefings produced by the Public Law Project and Institute for the Future of Work are accessible in Connected By Data’s DPDI Bill resources document, which is regularly updated.

If you would like to know more or share your work with an active group of civil society organisations, Connected by Data convenes a fortnightly Data and AI Policy call. Get in touch with Adam to find out more!