People's Panel on AI Bulletin 3

This is the third bulletin from the People’s Panel on AI, sharing observations and insights from Connected by Data as an observer. You can find earlier bulletins here.

Today, as well as joining plenary sessions at the AI Fringe, People’s Panel members took part in lots of small-group work: from hands-on learning with generative AI tools to one-to-one conversations with scientists at the AI Hopes & Fears Lab.

Image: Members of the People’s Panel on AI in discussion with scientists at the AI Hopes & Fears Lab

Panel members will be bringing their learning and perspectives together in deliberation sessions over the next few days, but in the meantime, here are a few selected insights and observations I picked up today:

- Learning about limits: understanding what AI cannot do may help us to be more hopeful about its potential.

- Starting from social good: can we flip the conversation? Priority questions from the panel place the emphasis on working out where AI fits into the kind of lives people value.

- Hopes and fears: new themes on climate, education and public/private sector use.

This bulletin reflects just a few of the discussions from today, as captured by the Connected by Data team. A full report from our deliberation facilitators based on detailed session notes and recordings will be available shortly after this week.

What do you think so far? We have an evaluator working with us to capture learning from the People’s Panel, and help us adjust as we go. We are keen to get your views and would be really grateful if you could find a couple of minutes to complete this anonymous survey for our independent evaluator.

Learning about limits

Hands-on time with generative AI tools, and conversations with scientists working on cutting-edge applications of AI in health, education and work, as well as with researchers thinking critically about truth, fairness and impacts on everyday life, gave the panel a chance to learn about both the potential and the limits of different tools.

One of the big surprises for many was that generative AI models don’t have ‘live’ knowledge: they are trained on data up to a particular point in time. This brings into focus questions about the data used to train models.

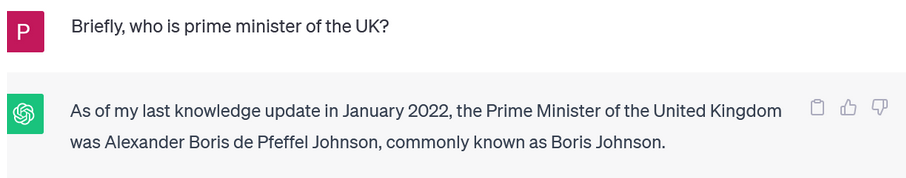

“ChatGPT can’t tell us anything after 2022. That really surprised me.” Panel member

Image: Screenshot from one of our ChatGPT sessions this morning, revealing the limits of the model.

The potential of AI to transform medical treatment was a key theme in the early hopes we explored yesterday. Today, after talking with scientists in the AI Hopes & Fears Lab, panel members said they had a better understanding of both the potential and the limits of medical AI. “It learns from the data that’s already there - but if there isn’t data, it can’t be better than an existing doctor.” “The main thing it seems to deliver is time saving.”

While a first encounter with AI tools could increase fears, finding out more about them helped to put concerns in context. As a facilitator summarised: “Understanding the limits seems to help us be more hopeful.”

Starting from the social good

The panel attended the ‘Public Voice and AI’ session at the AI Fringe, which explored the case (and challenges ahead) for sustained structures and practices of public engagement around AI - including a shout-out to the People’s Panel from Jack Stilgoe.

In the Q&A, People’s Panel members asked: “Aren’t we approaching this the wrong way around? Shouldn’t we start from talking about what is good for society - rather than starting from technology?”

This evening, breakout groups explored the kinds of questions that could provide a starting point for thinking about how AI should fit into everyday life. These questions could complement, or challenge, questions that ask how everyday life might need to change to accommodate AI:

- Where do we want to live?

- How do we want to work?

- How can we deliver better education?

- Do we need school and education?

- How do we want to travel?

- How can we deliver health, happiness and choice?

- How can we address the challenge of climate change?

- How can we collaborate together globally?

How is the conversation changed when we take these as the starting point?

For example, reflecting on sessions about the creative industries, panellists questioned whether the efficiency gains from AI tools that help write scripts or create movies are worth it if they lead to job losses or less space for creativity. Thinking about the progress the public want to see, and how this fits with what AI can currently offer, may provide an alternative to the feeling, expressed by some, that people are being presented with “progress for progress’ sake”.

Hopes and fears

The Panel ended the day by reflecting on whether, after more than a day of exposure to debates and discussions on AI, their hopes and fears have stayed the same or changed.

“I’m still on the fence about AI: but I now have different hopes and concerns.” Panel member

In addition to new perspectives on the use of AI in medicine, discussions today focussed more on the topics of:

- Education - both concerns about how AI might disrupt learning, and the need for a focus on education and empowerment from a young age, to equip everyone with the skills to understand, interrogate and navigate AI.

- Climate change - “it doesn’t really feel like we’re using AI in the right ways when it comes to climate change,” reflected one panel member. Others felt that the examples of AI use in climate action shared at the Fringe were very limited. Several of the points panel members made lead to the question: is the path of AI use we are on climate resilient?

- Public and private sector - “what could be good in the public sector could be dangerous in the private”. We saw the first questions about who owns, controls and deploys AI today, and this may be a theme the panel explores further.

“One thing I learned today is that AI is not going away. I’m turning to embrace it and learn about it, instead of ignoring it and hoping it will go away.”

What’s next?

As we were having our discussions at the AI Fringe in London today, the Bletchley Declaration was published at the Government’s AI Safety Summit in Milton Keynes. Tomorrow, along with attending more sessions at the AI Fringe and talking to experts about the different roles of government, industry, academia and civil society, we’ll start looking at the outputs from Bletchley, with the Panel developing its review and response.