Some may say the public can’t be expected to understand complex issues like AI, governance and highly technical spaces. But this claim often comes from those who might have to give up some power to make room for public participation in policymaking, system design and implementation. And power is a tricky thing for anyone to relinquish.

The People’s Panel on AI, a sortition-selected group of 11 members of the public, came together to carry out a deliberative review of the UK’s AI Summit Fringe event in late 2023. The results demonstrate that, contrary to that concern, the public (in some cases with no previous knowledge of AI, or just uninformed “fears” about “robots taking over”) can not only understand AI but can do so quickly, at relatively little cost, and with meaningful outcomes. The panel showed that whilst the public may not immediately understand the technical details of how AI algorithms are coded, they can quickly grasp how AI’s implementation affects day-to-day life: their own lives and the lives of their families and friends. In fact, members of the public are exactly the right people to be asking the right questions of AI development and implementation, generally approaching the discussion by asking what society wants rather than what AI could do. And experts who see this public participatory practice in action may be surprised by what they learn.

“What was very humbling is, if you bring 11 people from ordinary life into these discussions, and you get to sit and listen to them about the issues that matter to them, you realize how in the weeds we get and actually listening to all of you talk about how is this going to impact on education security? How is this going to impact on my job? How is this going to affect my neighbour who is disabled? You realize that these are the very human things that people want to talk about. And this is how I feel we should be framing our wider discussions about artificial intelligence.”

Attendee at the People’s Panel on AI “verdict” session

The People’s Panel on AI spent four intense days in London hearing from a range of expert witnesses, participating in a Hopes and Fears Lab, attending a wide range of panel sessions at the AI Summit Fringe and deliberating on it all, before ending the week with a “verdict” session at which it made seven recommendations for the future development and implementation of AI.

In our Connected Conversation entitled ‘Involving the public in AI policymaking: Experiences from the People’s Panel on AI at the UK AI Summit Fringe’, we brought together members of the Panel and representatives from the Advisory Group with an open audience to explore what lessons we could learn from the People’s Panel on AI process. Our speakers were:

- Tim Davies, Research and Practice Director at CONNECTED BY DATA (Host)

- Janet, Margaret and June (Members of the People’s Panel on AI)

- Henrietta Hopkins, Director at Hopkins Van Mil (Delivery partner)

- Lex Harrison, Director at Milltown Partners (Advisory Group member)

- Octavia Reeve, Associate Director at Ada Lovelace Institute (Advisory Group member)

- Richard Milne, Deputy Director at Kavli Centre for Ethics, Science and the Public (Advisory Group member)

It was clear in all the discussions that both the deliberative process and its outcomes are still having a ripple effect on policymaking discussions. Lex from Milltown Partners (who ran the AI Summit Fringe) shared an update on their recently published report on the event, ‘Perspectives from the AI Fringe’, which includes a section on the People’s Panel on AI. The Fringe grew organically to complement the Government’s Global AI Safety Summit at Bletchley Park by broadening the conversation: it began with the aim of bringing industry, academic and civil society voices together to talk about AI’s impacts on society now (rather than purely existential harms). The openness of Lex and his team to including a deliberative public participation process in the Fringe helped to demonstrate the strength of deliberation focused on a specific moment, and the importance of public perspectives.

Deliberative review is, of course, one of a range of public engagement tools, and one that can complement other activities. Octavia from the Ada Lovelace Institute, a member of the Advisory Group, reflected in the conversation that this blending of tools is important. There is a place for large-scale surveys that capture attitudes from large numbers of people, but deliberation offers the richness and focused conversation needed to engage with more complex issues. It is most powerful in areas of policymaking where, for example, nuanced issues need to be considered, trade-offs made between options, and difficult considerations grappled with. She also shared recent research published by the Ada Lovelace Institute showing that people’s attitudes to AI change depending on the uses or technologies they are asked about, so deeper engagement around specifics brings a fuller understanding. Different approaches to engagement also carry different power structures. Deliberation is a “high power” process that, through conversation, the consideration of information and evidence, and iteration, results in a deeper and broader understanding of the public’s judgement (whereas surveys are “low power” and the insight is more one-dimensional). Perhaps it is this space for judgement, and the high power of the process, that makes policymakers less inclined to engage the public in deliberative processes.

Henrietta from Hopkins Van Mil, our delivery partner who ran the whole deliberative process, underlined the importance of depth of engagement. The People’s Panel on AI was created in six weeks from design to delivery, and Henrietta talked about how this speed was very different from other processes they had run. She had been concerned that it wouldn’t do deliberation justice, but admitted this turned out not to be the case. In many ways the speed created momentum, and the intensity of the week helped build trust within the group: not by requiring everyone to agree, but by creating a space to engage with the content and share views with each other.

“It [the People’s Panel on AI] was extraordinary… done carefully and with no principles [of deliberation] thrown out with the speed. In fact I stuck to them strongly… I’m now trying to integrate “lightning deliberation” in other work.”

Henrietta Hopkins from Hopkins Van Mil, our delivery partner

At the end of the process, Hopkins Van Mil produced its report on the deliberation outcomes, and we wrote a reflective piece on the experience and on how to build on the lessons learned to involve the public in AI policymaking. We suggest:

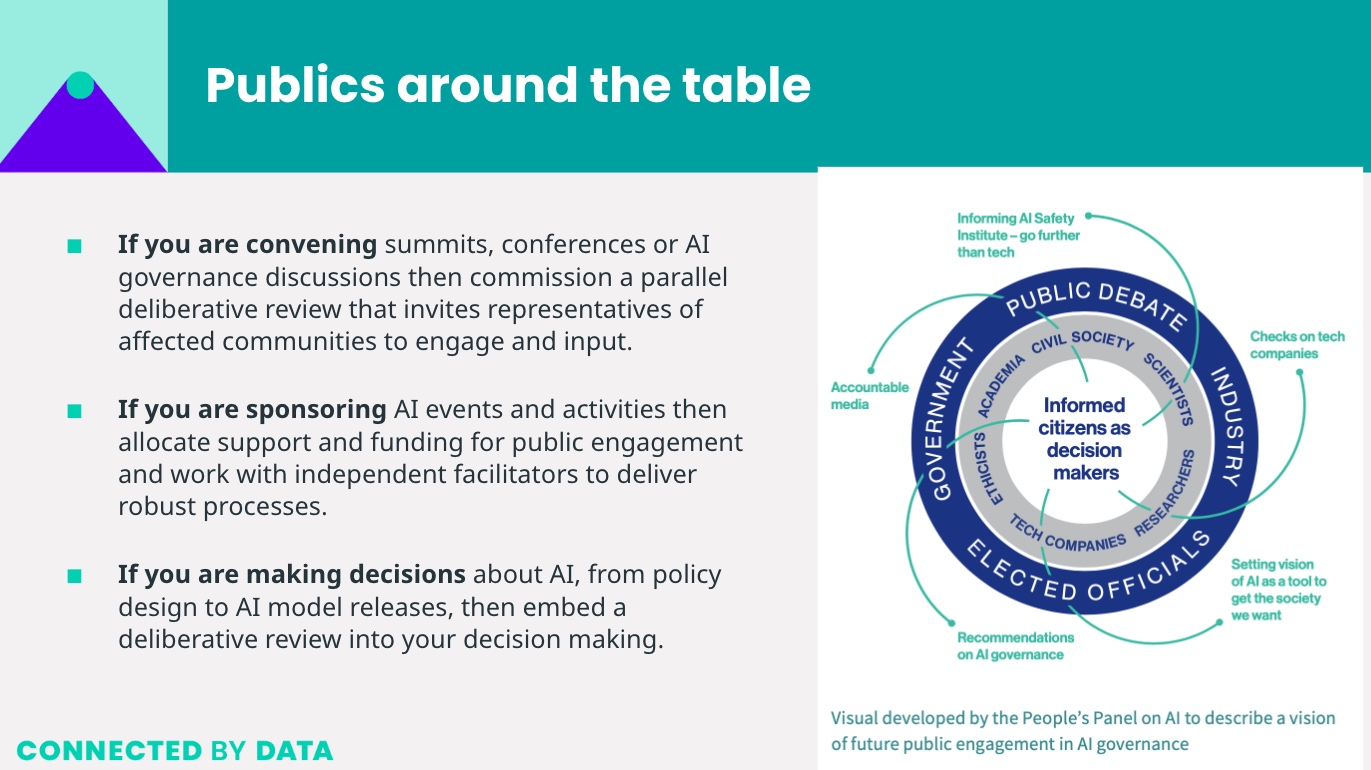

There was power in creating a deliberative moment focused around a summit, where people listen and talk to each other, and this approach can be carried into other key points in policymaking discussions and developments. Using deliberation in this way, rather than through ongoing “assemblies”, may not make the public decision makers, but it certainly gives them voices and perspectives that influence policymakers. Running a deliberative review in this way isn’t free, but as a percentage of total event costs it is small, and CONNECTED BY DATA encourages organisations hosting appropriate events to build the costs and process into their budgets and plans.

Richard, from the Kavli Centre, which ran the Hopes and Fears Lab alongside the AI Summit Fringe, reflected on how those listening to the deliberation, observing the process or hearing the outcomes are often surprised by how insightful the public’s questions are. He observed that the conversation is almost not about the subject being discussed (e.g. AI) but about people’s lives, and people are the experts in that. Janet, one of the People’s Panel on AI members, agreed:

“We didn’t stop talking about AI. It was exhausting. It overtook us. A number of us refer to the experience as life changing as the impact hasn’t gone away. During the week we were waking up at 3am and making notes about what we wanted to ask the next day. … In one session someone suggested to us that we didn’t know the tech and ordinary citizens can’t understand [AI]. I think the panel will come up against this all the time but the panel can ask the questions. What’s the value? Does society want it?”

Janet, member of the People’s Panel on AI

And those open to listening were transformed too:

“I think this is an extraordinarily valuable gift to all of us in the tech community and to the rest of the country, the entire country, and trying to figure out who we want to be and how we want to work with these technologies. … I was personally particularly struck by the world of work recommendation, and thinking about safe transitioning and leaving no one behind. We have talked a lot … about the dangers and the need to stop and slow down and be careful and those conversations are important. But I think also the conversations of the opportunities and where we can make things so much better in daily life and work in how we do the things we do. And I really liked that that recommendation was specific and tangible with training and taxes.”

Attendee at the People’s Panel on AI “verdict” session

We concluded this Connected Conversation by posing the question: “What is the next challenge we need to meet?”

- How the different conversations about public involvement can be combined, made visible and work together as elements of a single system.

- There’s a critical question about how we factor in the international context: decisions taken outside the UK that might have a significant impact on citizens here.

- Making sure the People’s Panel on AI recommendations are taken seriously by policy makers - and the conversation continues.

- Staying optimistic about the future of the public voice in AI. The OECD counts around 700 recent citizens’ assemblies, Belgium is currently organising a citizens’ council under its EU Council presidency, and participatory methods have helped shape policy on abortion law and drug use.

If you’re interested in the People’s Panel on AI, please visit our project page, which contains links to all the reports mentioned above, plus copies of the Daily Briefings we issued each day, a short video about the process and an independent evaluation report (funded by the Ada Lovelace Institute). This project was made possible by the generous support of our Advisory Group and funding from the Mozilla Foundation, the Accelerate Programme for Scientific Discovery, and the Kavli Centre for Ethics, Science, and the Public, to all of whom we are grateful. If you are interested in exploring how you might run or support a future deliberative review, get in touch.

A copy of the slides supporting this conversation is available to view here.